14. Cross-Validation

K-Fold Cross-Validation

K-fold cross-validation is a popular technique for estimating a model’s out-of-sample performance. It is similar to, but more sophisticated than, the validation set approach that we have considered in examples so far. The process for scoring a model using K-fold cross-validation is detailed below.

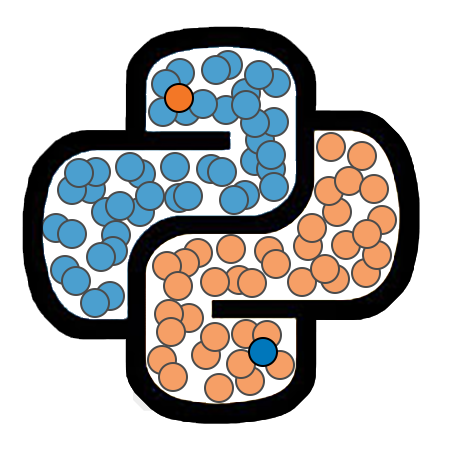

We start by randomly splitting the labeled data into K roughly-equal-sized pieces called folds.

We then train K versions of the model, each using the same set of hyperparameters.

Each version of the model is trained on K-1 folds and validated on the remaining fold. That is to say, we calculate the out-of-sample validation score for each model using the single fold on which that particular model was not trained. Each fold is thus used as the validation set for exactly one model.

This will result in K out-of-sample validation scores. We average these scores together, and report that as the cross-validation score for the model.

We then retrain the model on the entire collection of labeled data that is available to us.
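The steps above can be sketched by hand with Scikit-Learn's KFold splitter. This is a minimal illustration rather than the lesson's own code: the small synthetic dataset, the names X_demo, y_demo, and fold_scores, and the choice of K = 5 are all assumptions made for the example.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold

# Illustrative data (an assumption for this sketch, not the lesson's dataset).
X_demo, y_demo = make_classification(n_samples=200, n_features=6, n_informative=4,
                                     n_redundant=0, n_classes=3, random_state=0)

K = 5
# Step 1: randomly split the labeled data into K roughly equal folds.
kf = KFold(n_splits=K, shuffle=True, random_state=0)

fold_scores = []
for train_idx, valid_idx in kf.split(X_demo):
    # Steps 2-3: same hyperparameters every time; train on K-1 folds,
    # then score on the single fold this model has not seen.
    model = LogisticRegression(max_iter=1000)
    model.fit(X_demo[train_idx], y_demo[train_idx])
    fold_scores.append(model.score(X_demo[valid_idx], y_demo[valid_idx]))

# Step 4: average the K out-of-sample scores.
cv_score = np.mean(fold_scores)

# Step 5: retrain on all of the labeled data.
final_model = LogisticRegression(max_iter=1000).fit(X_demo, y_demo)

print('Fold scores:', np.round(fold_scores, 3))
print('Cross-validation score:', round(cv_score, 3))
```

Note that the cross-validation score describes the modeling procedure; the model we actually keep is final_model, which has seen every labeled observation.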

Since the cross-validation score is calculated as the average of several out-of-sample estimates, it tends to provide a more stable estimate of the model’s out-of-sample performance than that obtained using a single validation set.

Common values for K are 3, 5, 10, and n, where n is the number of labeled observations. When K = n, each fold contains a single observation, and we refer to the technique as leave-one-out cross-validation, or LOOCV.
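Leave-one-out cross-validation holds out a single observation at a time, so it produces one split per observation. The sketch below checks this on a toy array using Scikit-Learn's LeaveOneOut splitter and compares the result with KFold when the number of folds equals the number of rows. The array X_small and the variable names are assumptions made for illustration.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut

# Toy data with n = 6 observations (illustrative only).
X_small = np.arange(12).reshape(6, 2)
n = X_small.shape[0]

# Collect (train indices, validation indices) for each split.
loo_splits = [(tr.tolist(), te.tolist())
              for tr, te in LeaveOneOut().split(X_small)]
kfold_n_splits = [(tr.tolist(), te.tolist())
                  for tr, te in KFold(n_splits=n).split(X_small)]

print('Number of LOOCV splits:', len(loo_splits))
print('Validation fold sizes:', [len(te) for _, te in loo_splits])
print('Same splits as KFold with n folds:', loo_splits == kfold_n_splits)
```

Because LOOCV trains one model per observation, it is usually practical only for small datasets or models that are cheap to fit.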

The process of performing K-fold cross-validation is illustrated in the figure above.

Scikit-Learn provides us with multiple tools for performing cross-validation. We will explore one of those in this lesson.

Import Packages

We will begin by importing a few of the packages we will need in this lesson. The tools directly related to cross-validation will be imported below, near where they are first used.

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

from sklearn.linear_model import LogisticRegression

from sklearn.tree import DecisionTreeClassifier

from sklearn.datasets import make_classification

Generate Data

We will illustrate K-fold cross-validation using a synthetic dataset created for a classification problem. The dataset contains 250 observations, each of which will have 6 features, and will be assigned one of 10 possible classes. The features are stored in an array named X, while the labels are stored in an array named y. We start by viewing the contents of X in a DataFrame format.

np.random.seed(1)

X, y = make_classification(n_samples=250, n_features=6, n_informative=6,

n_redundant=0, n_classes=10, class_sep=2)

pd.DataFrame(X)

Let’s now view the first few elements of y.

print(y[:20])
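Before cross-validating, it can help to confirm the shape of the data and how many observations fall in each class, since every fold should contain examples of each class. The sketch below regenerates a dataset with the same settings as above, but passes random_state=1 directly to make_classification so that the block is self-contained. The names X_chk, y_chk, and class_counts are assumptions made for this example.

```python
import numpy as np
from sklearn.datasets import make_classification

# Same settings as the lesson's dataset; random_state is passed explicitly
# here so the sketch does not depend on NumPy's global seed.
X_chk, y_chk = make_classification(n_samples=250, n_features=6, n_informative=6,
                                   n_redundant=0, n_classes=10, class_sep=2,
                                   random_state=1)

print('Feature array shape:', X_chk.shape)
print('Label array shape:  ', y_chk.shape)

# Number of observations in each of the 10 classes.
class_counts = np.bincount(y_chk)
print('Observations per class:', class_counts)
```

With 250 observations spread over 10 classes, each class has only a few dozen members, which is one reason per-fold scores on this dataset can vary noticeably.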

Cross-Validation in Scikit-Learn

Scikit-Learn provides many tools for performing cross-validation. One of the most useful is the cross_val_score function. We can import that from the model_selection module in sklearn.

from sklearn.model_selection import cross_val_score

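The function cross_val_score takes care of most of the steps in cross-validation for us. It splits the data into K folds, and then trains K versions of the model, with each version being trained on a training set that leaves out exactly one of the folds. The function scores each version on its held-out fold, and then returns a NumPy array containing all K out-of-sample scores calculated in this way. Note that when cv is given as an integer, the folds are not shuffled. For a classifier, cross_val_score uses stratified folds, which keep the class proportions in each fold close to those of the full dataset. To shuffle the data before splitting, we can pass a splitter object such as StratifiedKFold with shuffle=True as the cv argument.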
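The following sketch illustrates how the cv argument controls the split. For a classifier, passing an integer gives the same scores as passing an unshuffled StratifiedKFold object, while a shuffled splitter generally gives different folds and therefore different scores. The demo dataset and the names clf, scores_int, scores_strat, and scores_shuffled are assumptions made for this example.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Illustrative data (not the lesson's dataset).
X_demo, y_demo = make_classification(n_samples=200, n_features=6, n_informative=4,
                                     n_redundant=0, n_classes=3, random_state=0)
clf = LogisticRegression(max_iter=1000)

# Integer cv: stratified, unshuffled folds for a classifier.
scores_int = cross_val_score(clf, X_demo, y_demo, cv=5, scoring='accuracy')

# The same split, written out explicitly.
scores_strat = cross_val_score(clf, X_demo, y_demo,
                               cv=StratifiedKFold(n_splits=5), scoring='accuracy')

# A shuffled split; random_state makes the shuffle reproducible.
shuffled = StratifiedKFold(n_splits=5, shuffle=True, random_state=1)
scores_shuffled = cross_val_score(clf, X_demo, y_demo, cv=shuffled, scoring='accuracy')

print('cv=5:                 ', np.round(scores_int, 3))
print('StratifiedKFold(5):   ', np.round(scores_strat, 3))
print('Shuffled, stratified: ', np.round(scores_shuffled, 3))
```

One consequence is that calling np.random.seed before cross_val_score does not change an integer-cv split; reproducible shuffling is controlled through the splitter's random_state instead.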

In the cell below, we create an instance of the LogisticRegression class, and then use cross_val_score to perform 5-fold cross-validation. We then print the results for each fold, as well as the average validation accuracy across all of the folds.

lr_model = LogisticRegression(solver='lbfgs', multi_class='multinomial', max_iter=1000)

np.random.seed(1)

cv_results = cross_val_score(lr_model, X, y, cv=5, scoring='accuracy')

print('Validation Accuracy by fold: ', cv_results)

print('Average Validation Accuracy: ', np.mean(cv_results))

Notice that there is significant variation between the accuracy scores calculated on each of the 5 folds. We would get very different impressions of our model’s performance if we evaluated it using Fold 5 as the validation set (obtaining a validation accuracy of 43.75%) than if we instead used Fold 3 as the validation set (obtaining a validation accuracy of 56%).

The purpose of cross-validation is to smooth out this variation in validation scores by calculating an average score, obtaining a more stable, more reliable estimate of the model’s out-of-sample performance.

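Using more folds means that each version of the model is trained on a larger share of the data, which tends to make the estimate less pessimistic. It does not always make the estimate less variable, however, and using a large number of folds can be computationally expensive, since K versions of the model must be trained. As mentioned above, 5-fold and 10-fold cross-validation are commonly used.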
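One way to get a feel for the effect of K is to run cross-validation with several fold counts and compare the mean and standard deviation of the per-fold scores. The sketch below does this for K = 3, 5, and 10. The demo dataset and the names clf and summary are assumptions made for this example, and the exact numbers will depend on the data.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Illustrative data (not the lesson's dataset).
X_demo, y_demo = make_classification(n_samples=200, n_features=6, n_informative=4,
                                     n_redundant=0, n_classes=3, random_state=0)
clf = LogisticRegression(max_iter=1000)

summary = {}
for k in (3, 5, 10):
    # k models are trained here, so the cost grows with k.
    scores = cross_val_score(clf, X_demo, y_demo, cv=k, scoring='accuracy')
    summary[k] = (scores.mean(), scores.std())

for k, (mean_acc, std_acc) in summary.items():
    print(f'K={k:2d}  mean accuracy={mean_acc:.3f}  std across folds={std_acc:.3f}')
```

With more folds, each validation fold is smaller, so the individual fold scores often become noisier even when the average changes little.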

Fitting the Model on the Full Dataset

The function cross_val_score fits K versions of the model, each of which is trained on a slightly different subset of the training data. It does not, however, automatically retrain the model on the entire training set. It works on copies of the estimator, so the model object we passed in is left unfitted, and we will have to fit it ourselves. In the cell below, we train the model and then print the model’s training accuracy.

lr_model.fit(X, y)

print('Training Accuracy:', lr_model.score(X, y))

Notice that the training accuracy we obtain here (58.8%) is higher than the cross-validation estimate we obtained above (50.33%). This is expected: the training accuracy is measured on the same data that the model was fit to, and so it tends to be optimistic. The cross-validation estimate is an average of the model’s accuracy on out-of-sample data, and is thus a better measure of how well the model will perform when we apply it to new data.
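The gap between training and out-of-sample accuracy can also be measured fold by fold. The sketch below uses Scikit-Learn's cross_validate function with return_train_score=True, which reports both the score on each training portion and the score on each held-out fold. The demo dataset and the names results, train_mean, and valid_mean are assumptions made for this example.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_validate

# Illustrative data (not the lesson's dataset).
X_demo, y_demo = make_classification(n_samples=200, n_features=6, n_informative=4,
                                     n_redundant=0, n_classes=3, random_state=0)
clf = LogisticRegression(max_iter=1000)

# cross_validate returns a dict of arrays, one entry per fold.
results = cross_validate(clf, X_demo, y_demo, cv=5, scoring='accuracy',
                         return_train_score=True)

train_mean = results['train_score'].mean()
valid_mean = results['test_score'].mean()

print('Training accuracy by fold:  ', np.round(results['train_score'], 3))
print('Validation accuracy by fold:', np.round(results['test_score'], 3))
print('Mean training accuracy:  ', round(train_mean, 3))
print('Mean validation accuracy:', round(valid_mean, 3))
```

A large gap between the two means is a common sign that the model is overfitting the training data.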

Using Cross-Validation Scores to Compare Models

Since the cross-validation score is an estimate of how well the model will perform when applied to new data, we should typically select the model with the highest cross-validation score when we are selecting between two or more different models.

In the cell below, we use cross_val_score to evaluate the performance of a decision tree model with max_depth=32.

dt_model = DecisionTreeClassifier(max_depth=32, random_state=1)

np.random.seed(1)

cv_results = cross_val_score(dt_model, X, y, cv=5, scoring='accuracy')

print('Validation Accuracy by fold: ', cv_results)

print('Average Validation Accuracy: ', np.mean(cv_results))

The decision tree model had a significantly higher score than the logistic regression model. Therefore, we would likely prefer the decision tree model. We will end this lesson by training the decision tree model on the full training set, and then calculating its training accuracy.

dt_model.fit(X, y)

print('Training Accuracy:', dt_model.score(X, y))
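The comparison in this lesson can be generalized to any number of candidate models. The sketch below scores several candidates on the same folds, picks the one with the highest mean cross-validation accuracy, and refits it on all of the data. The demo dataset, the candidate list (including the max_depth=4 tree), and the names candidates, folds, mean_scores, best_name, and best_model are assumptions made for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Illustrative data (not the lesson's dataset).
X_demo, y_demo = make_classification(n_samples=200, n_features=6, n_informative=4,
                                     n_redundant=0, n_classes=3, random_state=0)

# Hypothetical candidates, chosen only for this example.
candidates = {
    'logistic regression': LogisticRegression(max_iter=1000),
    'decision tree (max_depth=4)': DecisionTreeClassifier(max_depth=4, random_state=1),
    'decision tree (max_depth=32)': DecisionTreeClassifier(max_depth=32, random_state=1),
}

# A fixed random_state gives every candidate the same folds.
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=1)

mean_scores = {}
for name, model in candidates.items():
    scores = cross_val_score(model, X_demo, y_demo, cv=folds, scoring='accuracy')
    mean_scores[name] = scores.mean()
    print(f'{name}: {mean_scores[name]:.3f}')

# Select the candidate with the highest mean score and refit it on all data.
best_name = max(mean_scores, key=mean_scores.get)
best_model = candidates[best_name].fit(X_demo, y_demo)
print('Selected model:', best_name)
```

Scoring every candidate on identical folds keeps the comparison fair, since differences in score then reflect the models rather than the luck of the split.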